Project assignment: async execution speed

Related code:

#!/usr/bin/env python3
# -*- coding=utf-8 -*-
import asyncio
import re

import aiohttp
import pymongo
from lxml import etree

# Fetch a page and put its HTML into html_queue
async def request(session, html_queue, sem, url_queue):
    # The semaphore limits how many requests can run at the same time
    async with sem:
        # Take one URL out of url_queue
        url = await url_queue.get()
        # Fetch the HTML of the Douban movie page asynchronously
        async with session.get(url) as resp:
            data = await resp.text()
        # Put the HTML into html_queue
        await html_queue.put(data)

# Take a page out of html_queue, parse it, and write the results to the DB
async def handle(html_queue, lock, mycollection):
    # Take one page out of the queue
    html_text = await html_queue.get()
    # Parse the HTML with lxml's etree
    html = etree.HTML(html_text)
    # Each page lists 25 movies
    for li_number in range(1, 26):
        #################### Extract data ####################
        # Movie title(s)
        movie_path = html.xpath(
            f'//*[@id="content"]/div/div[1]/ol/li[{li_number}]/div/div[2]/div[1]/a/span/text()')
        movie_name_list = []
        for name in movie_path:
            # Strip the whitespace around "/" in the title
            movie_name_list.append(re.compile(r'(\s)+/(\s)+').sub('/', name))
        movie_name = ''.join(list(filter(None, movie_name_list)))

        # Actor information
        actors_path = html.xpath(f'//*[@id="content"]/div/div[1]/ol/li[{li_number}]/div/div[2]/div[2]/p/text()')
        ac_list = []
        for ac in actors_path:
            # Remove newlines and runs of whitespace, then the spaces around "/"
            ac_result = re.compile(r'(\s)/(\s)').sub('/', re.compile(r'[\n]|[\s]{2,}').sub('', ac))
            ac_list.append(ac_result)
        actors_information = ''.join(list(filter(None, ac_list)))

        # Score
        score_path = html.xpath(
            f'//*[@id="content"]/div/div[1]/ol/li[{li_number}]/div/div[2]/div[2]/div/span[2]/text()')
        score = ''.join(score_path)

        # Number of ratings
        evaluate_path = html.xpath(
            f'//*[@id="content"]/div/div[1]/ol/li[{li_number}]/div/div[2]/div[2]/div/span[4]/text()')
        evaluate = ''.join(evaluate_path)

        # Short description
        describe_path = html.xpath(
            f'//*[@id="content"]/div/div[1]/ol/li[{li_number}]/div/div[2]/div[2]/p[2]/span/text()')
        describe = ''.join(describe_path)

        # Put all the fields into one dict
        info = {
            'movie_name': movie_name,
            'actors_information': actors_information,
            'score': score,
            'evaluate': evaluate,
            'describe': describe
        }

        # Write the record to the DB
        async with lock:
            mycollection.insert_one(info)

# 定义异步主程序

async def main():

import time

start_time = time.time()

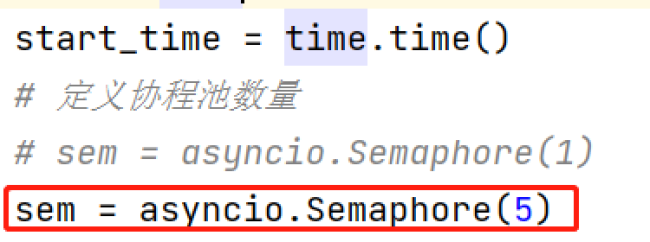

# 定义协程池数量

sem = asyncio.Semaphore(1)

header = {

"Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3",

"Accept-Encoding": "gzip, deflate",

"Accept-Language": "zh-CN,zh;q=0.9",

"Connection": "keep-alive",

"Host": "movie.douban.com",

"Upgrade-Insecure-Requests": "1",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36"

}

# 生成url数据,并丢到url_queue队列中

url_queue = asyncio.Queue()

for page in range(0,226, 25):

url = f"https://movie.douban.com/top250?start={page}"

await url_queue.put(url)

# 调用request异步函数

html_queue = asyncio.Queue()

async with aiohttp.ClientSession(headers=header) as session:

while not url_queue.empty():

await request(session, html_queue, sem, url_queue)

# 实例化mongo client

m_client = pymongo.MongoClient('mongodb://account:password@172.16.1.10:27017')

mydb = m_client['movie']

mycollection = mydb['movie_info']

# 生成lock,并调用handle异步函数

lock = asyncio.Lock()

while not html_queue.empty():

await handle(html_queue, lock, mycollection)

end_time = time.time()

print(end_time-start_time)

if __name__ == '__main__':
    # Start the event loop (asyncio.run replaces the deprecated get_event_loop pattern)
    asyncio.run(main())

Problem description:

Hi, why is crawling asynchronously even slower than crawling with multiple threads?

My understanding is that after each async request is sent, execution switches to the next request right away, so IO should not block.
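The switching described here only happens when several requests are already scheduled on the event loop at the same time; awaiting one coroutine to completion and then the next gives no overlap at all. A minimal sketch of the difference, assuming `asyncio.sleep(0.1)` as a stand-in for one network request (no real HTTP is done; `fake_request`, `sequential`, `concurrent` and `timed` are hypothetical names):

```python
import asyncio
import time


async def fake_request(url: str) -> str:
    # Stand-in for session.get(url): asyncio.sleep yields to the event loop
    # the way awaiting real network IO does (assumption: 0.1 s per request).
    await asyncio.sleep(0.1)
    return f"<html>{url}</html>"


async def sequential(urls):
    # Each await finishes before the next request starts: no overlap.
    return [await fake_request(u) for u in urls]


async def concurrent(urls, limit: int = 5):
    # The semaphore caps how many requests are in flight at once.
    sem = asyncio.Semaphore(limit)

    async def bounded(u):
        async with sem:
            return await fake_request(u)

    # gather schedules all coroutines together, so their waits overlap.
    return await asyncio.gather(*(bounded(u) for u in urls))


def timed(coro):
    # Run a coroutine on a fresh event loop and measure wall-clock time.
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


urls = [f"https://movie.douban.com/top250?start={p}" for p in range(0, 226, 25)]
seq_pages, seq_time = timed(sequential(urls))
con_pages, con_time = timed(concurrent(urls, limit=5))
print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

With 10 URLs the sequential version takes about 1 s and the concurrent one roughly 0.2 s. Passing `limit=1`, which matches the `asyncio.Semaphore(1)` in the code above, brings the concurrent version back to roughly the sequential time.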

Multithreading, on the other hand, reduces the time spent blocked on IO by running many threads.
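For comparison, a minimal thread-based sketch of the same workload, assuming `time.sleep(0.1)` stands in for one blocking HTTP call (`fake_blocking_request` is a hypothetical name). Each worker thread blocks on its own, so up to `max_workers` waits overlap:

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_blocking_request(url: str) -> str:
    # Stand-in for a blocking HTTP call (assumption: each call
    # blocks its thread for 0.1 s).
    time.sleep(0.1)
    return f"<html>{url}</html>"


urls = [f"https://movie.douban.com/top250?start={p}" for p in range(0, 226, 25)]

start = time.perf_counter()
# Five worker threads: while one thread sleeps, the others keep working.
with ThreadPoolExecutor(max_workers=5) as pool:
    pages = list(pool.map(fake_blocking_request, urls))
elapsed = time.perf_counter() - start
print(f"{len(pages)} pages in {elapsed:.2f}s")
```

With 5 workers the 10 simulated requests finish in roughly 0.2 s instead of about 1 s; the speedup comes from having several requests in flight at once, not from threads as such.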

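I expected both approaches to take roughly the same time in this assignment, but in practice the async version takes about twice as long as the multithreaded one. So I'm not sure whether something in my async logic is wrong; maybe there is still a blocking part I haven't spotted.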
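One common source of hidden blocking is a synchronous call inside a coroutine. `pymongo.MongoClient` is a synchronous driver, so `insert_one` holds up the whole event loop while it runs, and an `asyncio.Lock` around it does not change that. A minimal sketch, assuming `time.sleep(0.05)` stands in for one insert (`blocking_insert`, `handle_blocking`, `handle_offloaded` and `run` are hypothetical names; `asyncio.to_thread` requires Python 3.9+):

```python
import asyncio
import time


def blocking_insert(record: dict) -> None:
    # Stand-in for a synchronous driver call such as insert_one
    # (assumption: each insert blocks for 0.05 s).
    time.sleep(0.05)


async def handle_blocking(record: dict) -> None:
    # Calling the sync function directly freezes the whole event loop;
    # wrapping it in an asyncio.Lock would not change that.
    blocking_insert(record)


async def handle_offloaded(record: dict) -> None:
    # asyncio.to_thread runs the call in the default thread pool,
    # so the loop can keep serving other coroutines meanwhile.
    await asyncio.to_thread(blocking_insert, record)


async def run(handler, n: int = 10) -> float:
    # Schedule n handler calls concurrently and time the whole batch.
    start = time.perf_counter()
    await asyncio.gather(*(handler({"i": i}) for i in range(n)))
    return time.perf_counter() - start


blocked = asyncio.run(run(handle_blocking))
offloaded = asyncio.run(run(handle_offloaded))
print(f"blocking: {blocked:.2f}s, to_thread: {offloaded:.2f}s")
```

Even under `gather`, the blocking handlers run one after another (about 0.5 s for 10 records), while the `to_thread` version overlaps them in worker threads.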

So I'd like to ask for help with this.


