I turned off the video and wrote this myself, ran into a problem, and would like some help

# https://search.51job.com/list/150200,000000,0000,00,9,99,python,2,1.html

import requests
import threading
from multiprocessing import Queue
import re
import time

# Page-fetching thread class
class page_url(threading.Thread):
    def __init__(self, thread_name, page_queue, data_queue):
        super(page_url, self).__init__()
        self.thread_name = thread_name
        self.page_queue = page_queue
        self.data_queue = data_queue
        self.header = {
            "Host": "jobs.51job.com",
            "Connection": "keep-alive",
            "Upgrade-Insecure-Requests": "1",
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.102 Safari/537.36",
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
            "Sec-Fetch-Site": "none",
            "Sec-Fetch-Mode": "navigate",
            "Sec-Fetch-User": "?1",
            "Sec-Fetch-Dest": "document",
            "Accept-Encoding": "gzip, deflate, br",
            "Accept-Language": "zh-CN,zh;q=0.9"
        }

    def run(self):
        print("Thread started: {0}".format(self.thread_name))
        while not self.page_queue.empty():
            try:
                page = self.page_queue.get_nowait()
            except:
                pass
            else:
                url = "https://search.51job.com/list/150200,000000,0000,00,9,99,python,2,"+str(page)+".html"
                response = requests.get(url=url, headers=self.header)
                self.data_queue.put(response.text)

# Text-processing thread class
class data_html(threading.Thread):
    def __init__(self, thread_name, data_queue):
        super(data_html, self).__init__()
        self.thread_name = thread_name
        self.data_queue = data_queue
        # self.lock = lock

    def run(self):
        print("Thread started: {0}".format(self.thread_name))
        # Checking data_queue here shows it is empty; adding time.sleep(10) first makes it non-empty
        print(self.data_queue.qsize())
        while not self.data_queue.empty():
            try:
                text = self.data_queue.get_nowait()
            except:
                pass
            else:
                data_search = re.compile(r"window\.__SEARCH_RESULT__\s=\s(.*?)</script>")
                data = data_search.search(text)
                job_items = data.group(1)
                # print(job_items)

def main():
    page_queue = Queue()
    data_queue = Queue()
    lock = threading.Lock()
    for page in range(1,11):
        # url = "https://search.51job.com/list/150200,000000,0000,00,9,99,python,2,"+str(page)+".html"
        page_queue.put(page)
    thread_url_name_list = ["page thread 1", "page thread 2"]
    thread_url_name_end_list = []
    for thread_url_name in thread_url_name_list:
        thread_page = page_url(thread_name=thread_url_name, page_queue=page_queue, data_queue=data_queue)
        thread_page.start()
        thread_url_name_end_list.append(thread_page)
    while page_queue.empty():
        for url_end in thread_url_name_end_list:
            url_end.join()
    thread_html_name_list = ['html thread 1', 'html thread 2']
    thread_html_name_end_list = []
    for thread_html_name in thread_html_name_list:
        thread_html = data_html(thread_name=thread_html_name, data_queue=data_queue)
        thread_html.start()
        thread_html_name_end_list.append(thread_html)

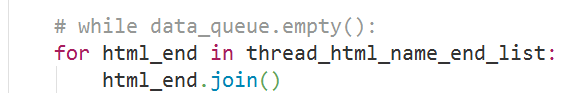

    while data_queue.empty():
        for html_end in thread_html_name_end_list:
            html_end.join()

if __name__ == "__main__":
    main()

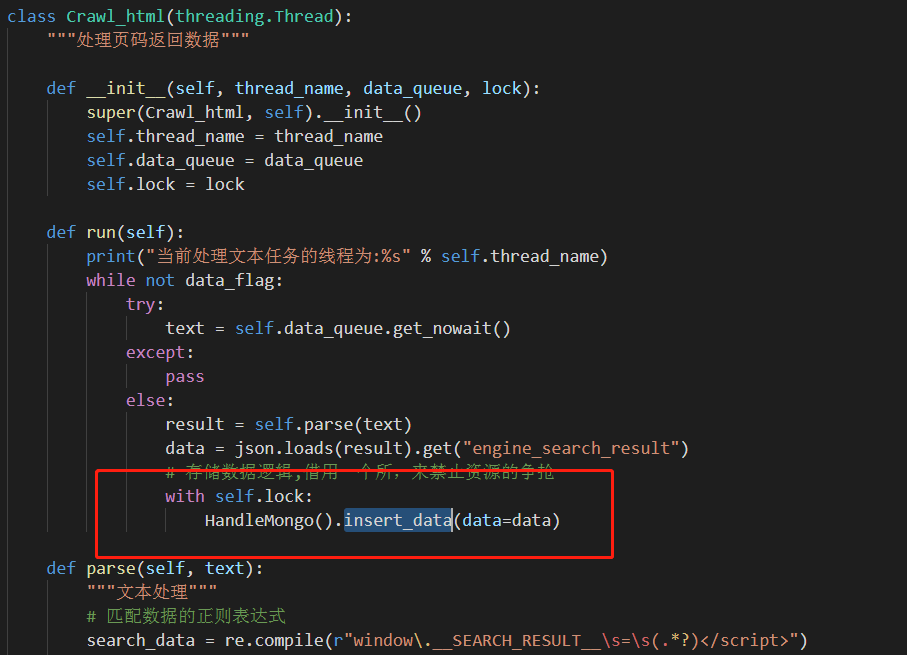

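I did not follow the video's approach of setting the global variables page_flag = False and data_flag = False as the loop conditions. My idea instead was to keep taking items for as long as the queue is not empty. In testing, the page-fetching class works fine, but the text-processing class has a problem: it has to wait a while before getting data from data_queue, otherwise data_queue has no data.

Question 1: Why does data_queue only contain data after waiting a while?

Question 2: I did not see any waiting in the teacher's video, yet it reads from data_queue without trouble. Why is that? Did I just not watch carefully enough?

Question 3: When I run this code repeatedly, it sometimes fails with: BrokenPipeError: [WinError 109] The pipe has been ended.

The explanation I found says: when a queue is used for inter-process communication and the other process has closed, using the queue again raises this error.

I don't quite follow. Aren't these threads? Am I using the queue after one of my threads has shut down?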
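On question 3, one likely factor is the queue type. The code imports `Queue` from `multiprocessing`, which is designed for passing data between processes: per the Python documentation, a background feeder thread pickles each item and flushes it to an underlying pipe, so `empty()` may briefly still report True right after a `put()`. That is at least consistent with both the "empty until I wait" symptom and a pipe-related error. For threads inside a single process, the standard library's `queue.Queue` is the usual choice. A minimal sketch of how it behaves, independent of the course code:

```python
import queue

# queue.Queue lives entirely in process memory and is guarded by a lock,
# so an item is visible to other threads as soon as put() returns.
page_queue = queue.Queue()
for page in range(1, 11):
    page_queue.put(page)

size_after_put = page_queue.qsize()      # 10
is_empty_after_put = page_queue.empty()  # False
first_page = page_queue.get_nowait()     # 1 (FIFO order)
print(size_after_put, is_empty_after_put, first_page)
```

Swapping the import to `from queue import Queue` would be the corresponding change in the posted code, assuming nothing else relies on cross-process behavior.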


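Hello!

1. Based on the code you posted, you did not use a lock, so multiple threads compete for time slices, which delays fetching the data.

2. What you wrote is an infinite loop: the code inside the for loop runs only when the while condition holds, and it then blocks waiting for the child threads to finish. Without join(), the main thread may run faster than the child threads and therefore finish first.

Keep it up, and happy learning!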
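The timing dependence described in the question can also be removed without flags or sleeps. The sketch below shows one common pattern, not the course's solution: consumers block on `get()` instead of polling `empty()`, and the main thread joins the producers before sending one sentinel per consumer. `fake_fetch`, `SENTINEL`, `page_worker`, `html_worker`, and `run_pipeline` are hypothetical names, and `fake_fetch` stands in for the real `requests.get` call so the example runs offline.

```python
import queue
import threading

SENTINEL = None  # hypothetical marker meaning "no more work"

def fake_fetch(page):
    # Stand-in for requests.get(...).text so the sketch runs offline.
    return "<html>page {0}</html>".format(page)

def page_worker(page_queue, data_queue):
    # Drain the pre-filled page queue; queue.Empty means every page was claimed.
    while True:
        try:
            page = page_queue.get_nowait()
        except queue.Empty:
            return
        data_queue.put(fake_fetch(page))

def html_worker(data_queue, results, lock):
    # Block until data arrives; stop only when the sentinel is received.
    while True:
        text = data_queue.get()
        if text is SENTINEL:
            return
        with lock:
            results.append(text)

def run_pipeline(pages, n_page_workers=2, n_html_workers=2):
    page_queue, data_queue = queue.Queue(), queue.Queue()
    for page in pages:
        page_queue.put(page)
    results, lock = [], threading.Lock()

    producers = [threading.Thread(target=page_worker, args=(page_queue, data_queue))
                 for _ in range(n_page_workers)]
    consumers = [threading.Thread(target=html_worker, args=(data_queue, results, lock))
                 for _ in range(n_html_workers)]
    for t in producers + consumers:
        t.start()                 # consumers may start early; they just block on get()
    for t in producers:
        t.join()                  # every page has been fetched and queued
    for _ in consumers:
        data_queue.put(SENTINEL)  # one sentinel per consumer, queued after all data
    for t in consumers:
        t.join()
    return results

results = run_pipeline(range(1, 11))
print(len(results))
```

Because the sentinels are queued only after every producer has been joined, each consumer sees all real items before it can receive its stop signal, regardless of which thread starts first.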
