import re
import threading
from multiprocessing import Queue

import requests

class Crawl_page(threading.Thread):
    '''Sends a request for each page number.'''
    # Request URL: https://search.51job.com/list/000000,000000,0000,00,9,99,python,2,1.html

    def __init__(self, thread_name, page_queue, data_queue):
        super(Crawl_page, self).__init__()
        # Thread name
        self.thread_name = thread_name
        # Queue of page numbers
        self.page_queue = page_queue
        # Queue of downloaded page data
        self.data_queue = data_queue
        # Request headers
        self.header = {
            # Host must match the domain actually requested (search.51job.com, not jobs.51job.com)
            "Host": "search.51job.com",
            "Connection": "keep-alive",
            "Upgrade-Insecure-Requests": "1",
            "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.193 Safari/537.36",
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
            "Sec-Fetch-Site": "none",
            "Sec-Fetch-Mode": "navigate",
            "Sec-Fetch-User": "?1",
            "Sec-Fetch-Dest": "document",
            "Accept-Encoding": "gzip, deflate, br",
            "Accept-Language": "zh-CN,zh;q=0.9",
        }

    # What this thread does

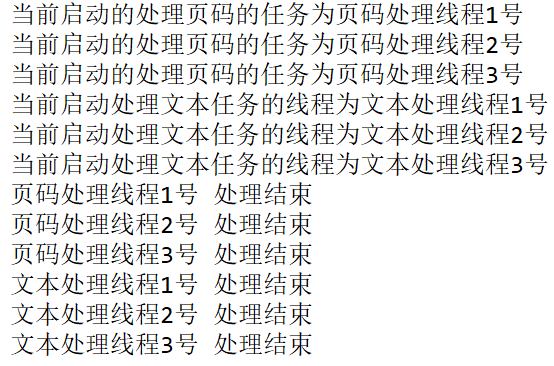

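    def run(self):
        '''Entry point executed when the thread starts.'''
        print('Starting thread: {}'.format(self.thread_name))
        # get_nowait() does not block; it raises an exception when the queue is empty.
        # page_flag is not defined in this snippet; presumably it is a module-level flag set elsewhere.
        while not page_flag:
            try:
                # Get the next page number
                page = self.page_queue.get_nowait()
            except Exception:
                # Queue is empty for now; loop again and re-check page_flag
                pass
            else:
                print('Current page: {}'.format(page))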

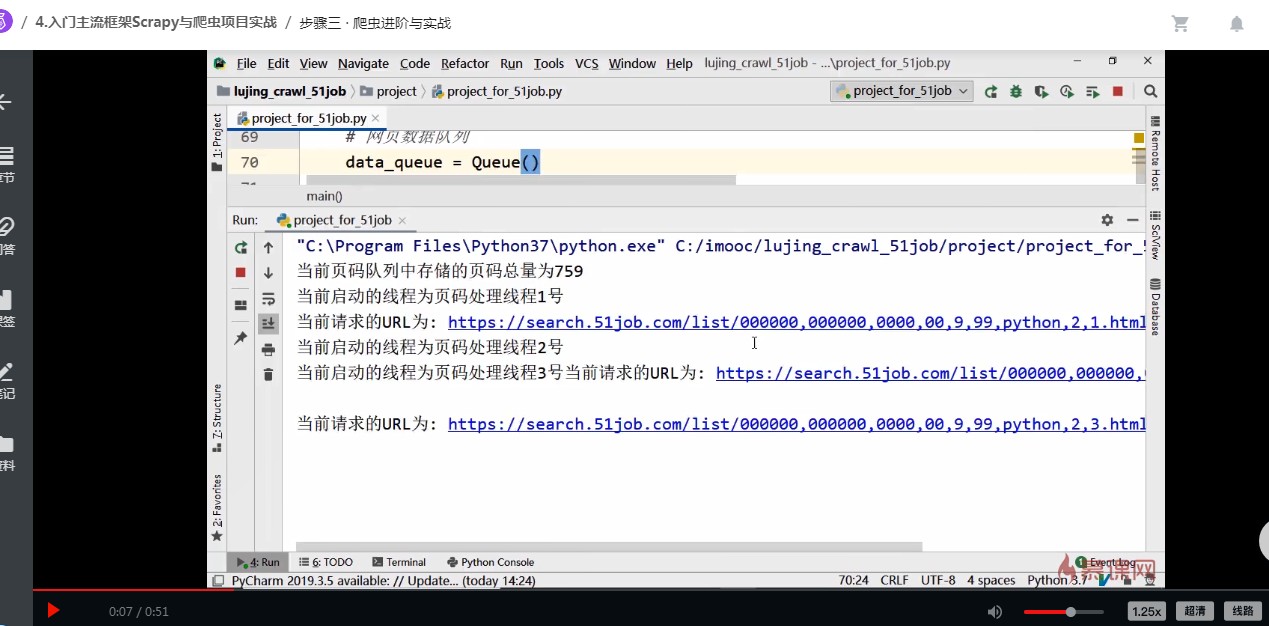

                page_url = 'https://search.51job.com/list/000000,000000,0000,00,9,99,python,2,' + str(page) + '.html'
                print('Requesting page: {}'.format(page_url))
                response = requests.get(url=page_url, headers=self.header)
                # Decode the response body as GBK
                response.encoding = 'gbk'
                self.data_queue.put(response.text)

# Screenshot of the error message
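
For comparison, here is a minimal sketch of the same page-queue / data-queue pattern. It is not the original poster's code. It assumes that `page_flag` can be replaced by a `threading.Event`, that a plain `queue.Queue` is enough (everything here runs in threads, not processes), and that the download step is passed in as a callable. The names `PageWorker`, `build_page_url`, `crawl` and `fetch` are hypothetical and exist only for this illustration. It catches `queue.Empty` specifically instead of using a bare `except`, so real errors are not swallowed.

```python
import queue
import threading

# Same URL pattern as in the post; only the page number changes.
BASE_URL = "https://search.51job.com/list/000000,000000,0000,00,9,99,python,2,{}.html"


def build_page_url(page):
    """Return the search-result URL for one page number."""
    return BASE_URL.format(page)


class PageWorker(threading.Thread):
    """Hypothetical worker: takes page numbers, stores fetched text in data_queue."""

    def __init__(self, name, page_queue, data_queue, fetch, stop_event):
        super().__init__(name=name)
        self.page_queue = page_queue
        self.data_queue = data_queue
        self.fetch = fetch            # callable(url) -> str, injected so it can be faked
        self.stop_event = stop_event  # assumption: stands in for the global page_flag

    def run(self):
        while not self.stop_event.is_set():
            try:
                # Wait briefly instead of spinning on get_nowait().
                page = self.page_queue.get(timeout=0.1)
            except queue.Empty:
                continue  # nothing queued right now; re-check the stop flag
            try:
                self.data_queue.put(self.fetch(build_page_url(page)))
            except Exception as exc:
                # Report the failure instead of hiding it.
                print("page {} failed: {!r}".format(page, exc))
            finally:
                self.page_queue.task_done()


def crawl(pages, fetch, n_threads=3):
    """Fetch all pages with n_threads workers and return the collected texts."""
    page_queue, data_queue = queue.Queue(), queue.Queue()
    for page in pages:
        page_queue.put(page)
    stop_event = threading.Event()
    workers = [
        PageWorker("crawl-{}".format(i), page_queue, data_queue, fetch, stop_event)
        for i in range(n_threads)
    ]
    for worker in workers:
        worker.start()
    page_queue.join()  # returns once every page has been marked task_done()
    stop_event.set()   # tell the workers to leave their loops
    for worker in workers:
        worker.join()
    results = []
    while not data_queue.empty():
        results.append(data_queue.get())
    return results
```

With real requests, `fetch` could be something like `lambda url: requests.get(url, headers=headers, timeout=10).text`. If the `Host` header is left out of `headers`, requests fills it in from the URL, which avoids a mismatch between the header and the domain being requested.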
