Problems when running the crawler

import requests
from lxml import etree
import threading
from queue import Queue
import pymongo

# Parse each listing page and extract the per-movie <li> nodes
class PageParser(threading.Thread):
    def __init__(self, thread_name, page_queue, data_queue):
        super(PageParser, self).__init__()
        self.thread_name = thread_name
        self.page_queue = page_queue
        self.data_queue = data_queue

    def handle_requests(self, page_url):
        header = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36"
        }
        response = requests.get(url=page_url, headers=header)
        if response.status_code == 200 and response:
            return response.text

    # Process the page data
    def parse_page(self, content):
        html = etree.HTML(content)
        page_all_data = html.xpath("//div[@class='article']/ol/li")
        for li in page_all_data:
            self.data_queue.put(li)  # put each movie's node into data_queue
            print(li, type(li))

    def run(self):
        print(f"{self.thread_name} has started")
        try:
            while not self.page_queue.empty():
                page_url = self.page_queue.get()
                page_response = self.handle_requests(page_url)
                if page_response:
                    self.parse_page(page_response)
        except Exception as e:
            print(f"{self.thread_name} met error: {e}")
        print(f"{self.thread_name} has ended")

class DataParser(threading.Thread):
    def __init__(self, thread_name, data_queue, mongo, lock):
        super(DataParser, self).__init__()
        self.thread_name = thread_name
        self.data_queue = data_queue
        self.mongo = mongo
        self.lock = lock

    def handle_list_one(self, content):
        return ''.join(content)

    def handle_list_some(self, content):
        return '/'.join(content)

    def parse_data(self, content):
        data = {
            "movie_name": self.handle_list_some(content.xpath("//div[@class='info']/div[@class='hd']/a/span/text()")),
            "actors_information": self.handle_list_some(content.xpath("//div[@class='info']/div[@class='bd']/p[1]/text()")),
            "score": self.handle_list_one(content.xpath("//div[@class='info']/div[@class='bd']/div/span[@class='rating_num']/text()")),
            "evaluate": self.handle_list_one(content.xpath("//div[@class='info']/div[@class='bd']/div/span[@class='']/text()")),
            "describe": self.handle_list_one(content.xpath("//div[@class='info']/div[@class='bd']/p[2]/span/text()"))
        }
        with self.lock:
            self.mongo.insert_one(data)

    def run(self):
        print(f"{self.thread_name} has started")
        try:
            while not self.data_queue.empty():
                datas = self.data_queue.get()
                self.parse_data(datas)
        except Exception as e:
            print(f"{self.thread_name} met error: {e}")
        print(f"{self.thread_name} has ended")

def main():
    page_queue = Queue()
    for i in range(0, 26, 25):  # TODO: change after testing
        page_url = f"https://movie.douban.com/top250?start={i}&filter="
        page_queue.put(page_url)

    data_queue = Queue()
    page_thread_names = ["P1", "P2", "P3"]
    page_thread_recover = []
    for thread_name in page_thread_names:
        thread = PageParser(thread_name, page_queue, data_queue)
        thread.start()
        page_thread_recover.append(thread)

    while not page_queue.empty():
        pass

    for thread in page_thread_recover:
        if thread.is_alive():
            thread.join()

    # Set up the MongoDB connection
    myclient = pymongo.MongoClient("mongodb://127.0.0.1:1112")
    mydb = myclient["TOP250"]
    mycollection = mydb["movie_info"]

    lock = threading.Lock()
    data_thread_names = ["DE1", "DE2", "DE3", "DE4", "DE5"]
    data_thread_recover = []
    for thread_name in data_thread_names:
        thread = DataParser(thread_name, data_queue, mycollection, lock)
        thread.start()
        data_thread_recover.append(thread)

    while not data_queue.empty():
        pass

    for thread in data_thread_recover:
        if thread.is_alive():
            thread.join()


if __name__ == "__main__":
    main()

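The code is above; it mostly follows the example from the earlier lesson.

When I actually run the crawler, though, several problems show up:

The same movie's information is scraped repeatedly

The scraped score does not match the real one

The number of ratings cannot be scraped at all

What is wrong with the code?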


Hello!

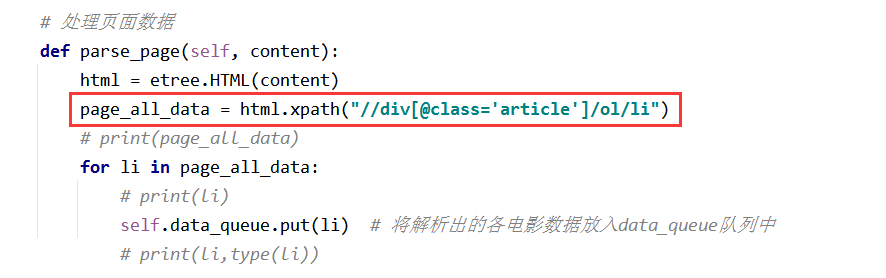

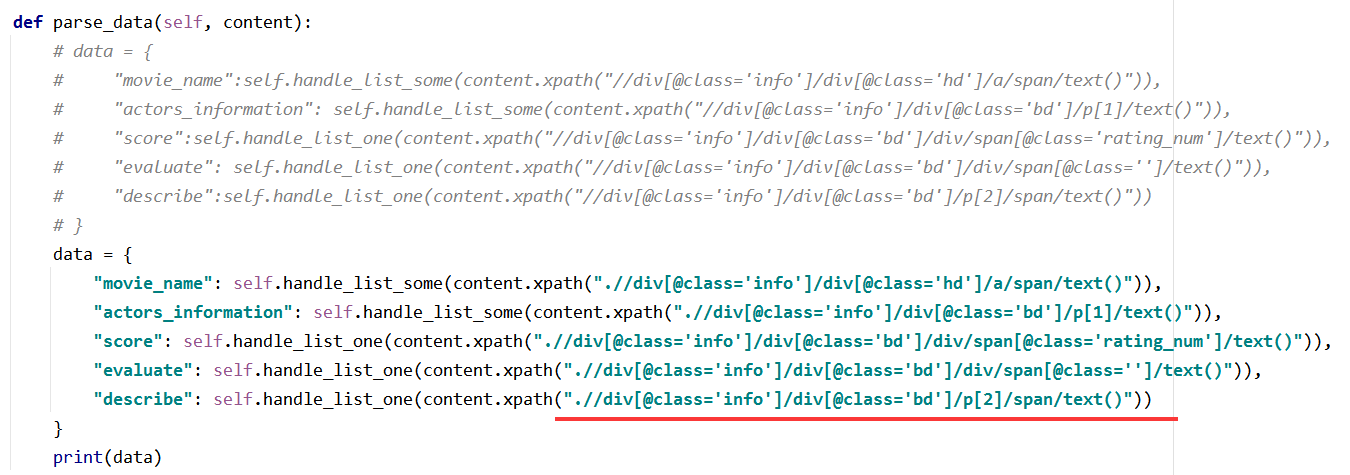

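1. data_queue stores the nodes that XPath extracted from each page. When parsing, a node is taken out of data_queue and should be parsed relative to that node. In your parse_data() method, the expressions that start with //div search from the root of the whole page instead, so every call returns the data for all movies on the page, which is why entries are duplicated. Refer to the code below for the change; once it is applied, the scraped score will also be correct.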

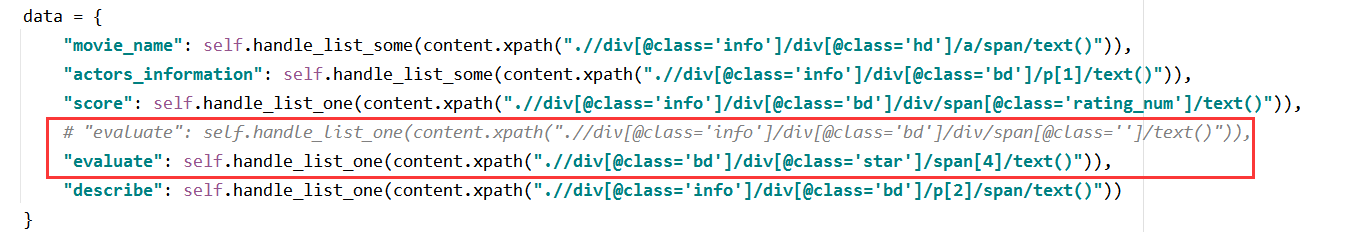

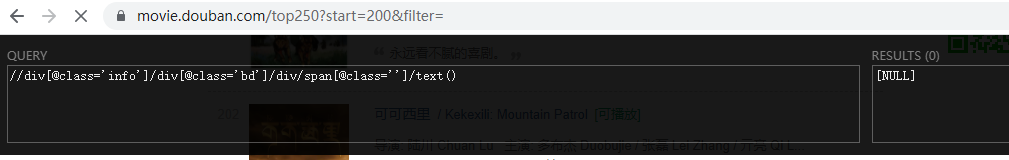
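The difference between the two kinds of path in point 1 can be reproduced with a small sketch. The HTML below is a made-up, simplified stand-in for the listing page, not the real Douban markup; only the behavior of `//` versus `.//` on an lxml element is the point.

```python
from lxml import etree

# Hypothetical, simplified stand-in for two movie entries on a listing page.
SAMPLE = """
<div class="article"><ol>
  <li><div class="info"><div class="bd"><div class="star">
    <span class="rating_num">9.7</span></div></div></div></li>
  <li><div class="info"><div class="bd"><div class="star">
    <span class="rating_num">9.6</span></div></div></div></li>
</ol></div>
"""

html = etree.HTML(SAMPLE)
items = html.xpath("//div[@class='article']/ol/li")
first = items[0]

# "//" starts from the document root, even when called on a single <li>,
# so it returns the scores of every movie on the page.
absolute = first.xpath("//span[@class='rating_num']/text()")

# ".//" starts from the current node, so only this movie's score is returned.
relative = first.xpath(".//span[@class='rating_num']/text()")

print(absolute)
print(relative)
```

In parse_data(), this would mean starting each expression with `.//div[@class='info']` rather than `//div[@class='info']`, so that each queued node yields only its own movie.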

2. The XPath expression for the number of ratings also needs adjusting; you can use the reference form as a guide.
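As a rough illustration of point 2, the sketch below assumes the rating block looks like the made-up markup shown, where the span holding the rating count has no class attribute at all and is the last span inside the block. If the real page differs, the expression would need to change accordingly. Under that assumption, `span[@class='']` matches nothing, while selecting the last span does.

```python
from lxml import etree

# Assumed structure of one entry's rating block; the count value is made up.
SAMPLE_ITEM = """
<li><div class="info"><div class="bd"><div class="star">
  <span class="rating5-t"></span>
  <span class="rating_num" property="v:average">9.7</span>
  <span property="v:best" content="10.0"></span>
  <span>12345人评价</span>
</div></div></div></li>
"""

li = etree.HTML(SAMPLE_ITEM).xpath("//li")[0]

# A span without a class attribute does not satisfy @class='',
# so the original style of expression finds nothing.
original = li.xpath(".//div[@class='star']/span[@class='']/text()")

# One possible alternative: take the last span inside the rating block.
fixed = li.xpath(".//div[@class='star']/span[last()]/text()")

print(original)
print(fixed)
```

Selecting by position is only one option; it depends on the rating count staying in the last span, so it is worth checking against the live page source.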

Happy learning!
